Why Do We Behave As We Do?

Average workers (whatever they call themselves: artisans, ‘middle-class’ or anything else) work hard all their lives and end up with not much more than when they started. The system is rigged to bring about that result. (It is based on the majority not owning enough to avoid having to work for an employer for a living.) But whenever they have a chance to vote, they vote to perpetuate the system. A clear case of turkeys voting for Christmas. Now why should that happen? Various experiments carried out by psychologists at American universities in the twentieth century may indicate possible answers.

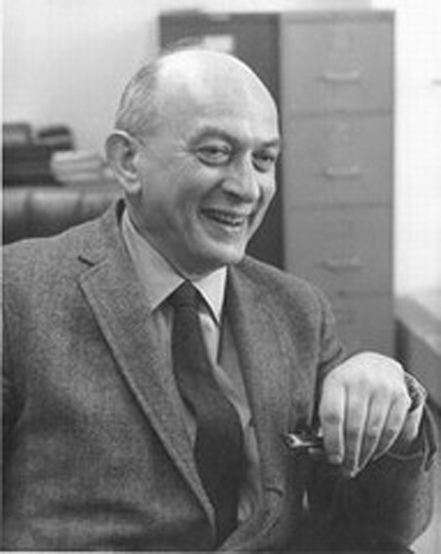

Solomon Asch and social compliance

In 1951 Solomon Asch, a professor at Swarthmore College, Pennsylvania, ran what he called tests of visual judgement. He organized groups of students, eight males in each group. Every group was shown two cards: one card had a single line drawn on it, and the second card had three lines, a, b, and c. They were asked to decide which of the three lines was nearest in length to the single line on the first card. The answer was never difficult: each time one of the lines (whether it was a, b, or c) was clearly the same length as the first card’s line. However, there was a ‘hidden agenda’. Everyone in the group except one person knew that the real reason for all this activity was to see how far this single unwitting person would be able to hold to his own (correct) opinion when all the others in the group unanimously chose the wrong answer. Each time it was so arranged that this one unaware person gave his answer last (or next to last). A number of tests were held. The first few times everyone gave the correct answer, and the ‘guinea pig’ had no difficulty in agreeing. But then there came a test when, as pre-arranged, all the other members of the group gave the wrong answer. So when it came to the ‘guinea pig’, he either had to give an obviously incorrect answer, which meant he was agreeing with all the others, or he had to be brave enough to stand out on his own, which meant appearing to criticize what all the others had said. Each group had eighteen tests; in six tests the group’s members all gave the correct answer, so the ‘guinea pig’ would have less reason to be suspicious, or think it was odd that the others were always wrong. The other twelve tests were the ‘critical trials’, where all the group-members except the ‘guinea pig’ gave the (same) wrong answer; this showed whether the ‘guinea pig’ was self-reliant enough to give the obviously correct answer, or meekly submitted to what everyone else thought.
More than one-third of the time the ‘guinea pigs’ simply accepted the group consensus, though that meant going along with a palpable error. (And there was never any significant doubt about what the correct answer was.) Three-quarters of the ‘guinea pigs’ gave at least one answer that they knew to be wrong during the critical trials. Such are the results of the deep-seated desire in most people to be socially amenable. Afterwards, when the real nature of the tests was revealed, most of the ‘guinea pigs’ who gave incorrect answers said they did not really believe what they said, but they went along with the group “for fear of being ridiculed or thought ‘peculiar’.”

Stanley Milgram and obedience

When you look at some of the gigantic atrocities of the twentieth century, you feel that if you tried to describe them to people who had never heard of them, you wouldn’t be believed. Would German workers, employed on the railways, calmly transport closed cattle trucks with thousands of Jews (and others) to concentration camps, where other German workers would obediently usher them to their deaths in the gas chambers? Or take the famine of 1932–33 in Ukraine, the North Caucasus, etc., which Stalin imposed to punish the peasants who were reluctant to enter collective farms: surely Russian workers (even when put into uniform as soldiers) wouldn’t confiscate all the remaining food in an area when they knew the locals would die of starvation? But that’s exactly what happened. Hitler probably killed between ten and twelve million people in the early 1940s; Stalin’s famine killed six to eight million, besides more millions in the Great Purge, the gulags and so on. (The British ruling class decided that British workers would fight valiantly on the same side as Stalin in the Second World War; many British ships were lost, and sailors drowned, in the ‘Arctic convoys’ taking supplies to Murmansk in north Russia.)

Of course many thousands of apparently ordinary citizens were involved in the slaughters organized by both Hitler and Stalin; and another American, Professor Milgram at Yale University, carried out some experiments in the 1960s in an attempt to discover how this could have happened. He advertised for volunteers, who were paid a modest sum for their time, to take part in what he called a learning experiment. Each of these outsiders was paired with a participant who was in on the secret and knew what was really happening; in each pair one was called a teacher, and one was called a learner. Apparently this was decided by the toss of a coin, but in fact Milgram fixed it so that the outsider, who thought it was a genuine educational exercise, was always the teacher, while the learner was the participant who was in on the secret. The teacher, and another such participant called a researcher, who was an authoritative figure in a white lab coat, watched while the learner had electrodes fastened to his arms. The teacher and the researcher then retired to a neighbouring position, divided from the learner by a screen, so that they were out of his sight but not out of hearing. There the teacher sat down in front of an ‘electric shock generator’, with a row of switches, each clearly labelled, from 15 volts, ‘slight shock’, right up in stages to 450 volts, ‘danger, severe shock’. The teacher then asked the learner a series of fairly straightforward questions: which of four words was most closely associated with another given word. The learner kept getting the answers wrong, and each time he did so, the teacher had to administer an electric shock. At each successive wrong answer, the teacher had to give (as he believed) an increased shock. Of course there was in fact no shock, but the learner gave increasing signs of discomfort, going up to screams and agonized shouts of ‘get me out of here’ as the supposed voltage intensified.
The teacher naturally became increasingly uneasy, but the researcher kept telling him he must continue, in a predetermined series of official-sounding statements, each successive one more strongly phrased. In the basic original experiment, all the teachers went up to pressing the 300-volt switch, and two-thirds of them pressed the (as they believed) 450-volt switch, ‘danger, severe shock’, despite the sounds of anguish coming from the learner.

The original experiment was subsequently repeated with several different adjustments, to try to find the elements most significant in persuading the teacher to abandon his natural, basic human reluctance to harm another human being, whom he scarcely knew. If the researcher told the teacher that he continued on his own responsibility, the voltage reached was much lower; if the researcher said he (the researcher) would take responsibility, the voltage reached was higher. When the researcher wore ordinary clothes, rather than the impressive lab coat, the voltage reached was lower. When the researcher was not actually present, but gave his orders or comments over the telephone, the voltage reached was lower; the same thing happened when the experiment took place in some rundown offices, rather than in the impressive surroundings of Yale University. All these elements (the evasion of responsibility, the persuasive costume, the physical presence, and the imposing environment) helped to transform an ordinary human being into someone who was prepared to inflict great pain on another individual who was a stranger to him, and against whom he could have had no personal animus. Milgram said: “The extreme willingness of adults to go to almost any lengths on the command of an authority constitutes the chief finding of the study and the fact most urgently demanding attention.” In fact, ‘ordinary people are likely to follow orders given by an authority figure, even to the extent of killing an innocent human being. Obedience to authority is ingrained in us all from the way we were brought up’.

Philip Zimbardo and the prison environment

Later still Professor Zimbardo, of Stanford University, became interested in the psychology of prisoners, and organized the Stanford prison experiment. This was funded by the U.S. Office of Naval Research, which wanted (somewhat naively) to know more about the causes of conflict between military guards and prisoners. According to one website, Zimbardo was ‘interested in finding out whether the brutality reported among guards in American prisons was due to the sadistic personalities of the guards (i.e. dispositional) or had more to do with the prison environment (i.e. situational)’. Zimbardo advertised for male college students, offering a small daily fee. More than seventy applicants were given searching interviews and tests to weed out any with psychological problems, medical disabilities, or any history of crime or drug abuse. Twenty-four were chosen, and were divided into nine prison guards and nine prisoners (with six reserves) by the random toss of a coin. A lifelike jail was built in the basement of the psychology building, with three cells (each with three cots) equipped with heavy barred doors, and a cubby hole to punish prisoners with solitary confinement. A corridor was used as a yard for exercise, and there was a larger, more luxurious room for the guards. Those designated prisoners were arrested at their homes by the local police force, and processed like all arrestees: handcuffed and searched, booked, fingerprinted, photographed for a mug-shot, and put in a holding cell. All this, said Zimbardo, was so that the prisoners would suffer the ‘police procedure which makes arrestees feel confused, fearful, and dehumanised’. At the Stanford ‘prison’ the prisoners were stripped naked, searched, and deloused: standard treatment in U.S. prisons, no doubt designed as a first dose of the continuous degradation which God’s own country inflicts on its prisoners.
The guards wore khaki uniforms, boots, and mirror sunglasses to prevent eye contact; each had a whistle and a police truncheon. The prisoners wore shapeless smocks and rubber sandals, and were given numbers by which they were always known: both guards and prisoners were forbidden to use names.

An attempted prisoner rebellion on day two was met with spray from a fire extinguisher, with prisoners stripped and losing their mattresses. The further into the experiment, the harsher the guards’ behaviour, the more rigorous the punishments they doled out. For example, sometimes prisoners were not allowed to wash or clean their teeth, were not given food, or were made to do many press-ups on the floor; or they were made to clean the toilet with their bare hands. Later, visits to the toilet were cancelled altogether, with prisoners forced to urinate and defecate into a bucket in the cell – and they were not allowed to empty the bucket. The smell became all-pervasive. Zimbardo said, ‘In only a few days our guards became sadistic and our prisoners became depressed and showed signs of extreme stress . . . as for the guards, we realized how ordinary people could be readily transformed from the good Dr Jekyll to the evil Mr Hyde’ (though none of the guards had shown any sadistic tendencies before the experiment). The prisoners themselves became submerged in their roles, applying for “parole” rather than simply walking out. One prisoner had a nervous breakdown after only thirty-six hours, with ‘uncontrollable bursts of screaming, crying, and anger’; he had to leave the experiment. ‘Within the next few days three others also had to leave after showing signs of emotional disorder that could have had lasting consequences.’ (And all of them had been pronounced particularly stable and normal a short while before.) If they had really been prisoners, they would have had to stay in jail. The exercise showed ‘how prisons dehumanise people, turning them into objects and instilling in them feelings of hopelessness’.

One visitor, a woman who later became Zimbardo’s wife, questioned the morality of the whole exercise. She said that the treatment of the prisoners had become disgraceful (she was the only one among fifty visitors who said that), and the experiment – originally scheduled for two weeks – was stopped after only six days. All the prisoners were glad it was over, but ‘most of the guards were upset that the study was terminated prematurely’. In fact during the exercise ‘no guard ever came late for his shift, called in sick, left early, or demanded extra pay for overtime work’. Two months later one guard gave an interview: ‘I thought I was incapable of this kind of behaviour. I was surprised and dismayed that I could act in such an unaccustomed way’.

In psychological terms, the brutality displayed at Stanford was situational, rather than dispositional.

Who gets the extra biscuit?

One last experiment: several groups, each consisting of three students from the University of California, Berkeley, were put in a room and given a complex subject to debate. One of the three was picked at random as ‘leader’. After a time, each group was given a plate of four biscuits. In every case, without anyone objecting or even discussing it, the ‘leader’ ate the extra biscuit. By pure chance, one student had achieved a ‘sense of entitlement’, which was never questioned (Times, 28 April).

All of us who live in a capitalist society have to accept that our behaviour is shaped by that society. If anyone feels that the conduct shown in these well-known experiments is acceptable, he or she will no doubt continue to support capitalist society. But some people work for a different society, with different – and preferable – behaviour.

ALWYN EDGAR