Confirmation Bias

A new series of articles about rational thinking – and how to do it better

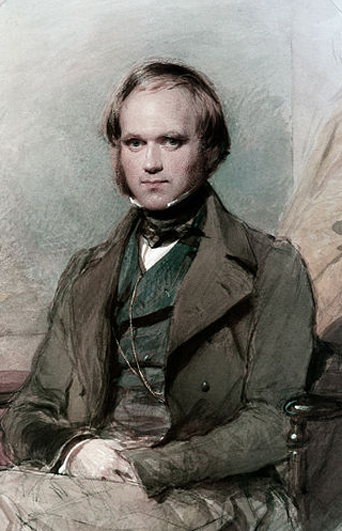

“I had also, during many years, followed a golden rule, namely, that whenever a published fact, a new observation or thought came across me, which was opposed to my general results, to make a memorandum of it without fail and at once; for I had found by experience that such facts and thoughts were far more apt to escape from the memory than favourable ones. Owing to this habit, very few objections were raised against my views which I had not at least noticed and attempted to answer” (Charles Darwin).

To evaluate arguments and points of view effectively, we need not only a good knowledge of logic and logical fallacies but also insight into the psychological processes that shape our beliefs. We all tend to greatly underestimate our own capacity for self-deception. We like to see ourselves as fully rational beings, but that belief is itself a form of self-deception. As experimental psychologists have found, we are all subject to cognitive biases and can rationalise completely false beliefs. By ‘cognitive bias’ we mean a systematic, frequently observed tendency to err when judging information. Some of these errors are caused by the mental shortcuts we use when making decisions or judgments; these shortcuts are called ‘heuristics’. Others result from motivation, part of an unconscious process in which our brains try to fit all our wants, desires and beliefs into a coherent whole. Over the last few decades a long list of these biases and heuristics has been catalogued.

As Darwin noted, whether we are aware of it or not, we all tend to look for evidence that supports what we already believe and to ignore or forget evidence that does not. This is called confirmation bias, and it is perhaps the most important of all the cognitive biases to know about. Take, for example, the supposed link between a full moon and dramatic changes in human behaviour. Despite the lack of statistically significant evidence for the claim, the idea persists in the popular imagination. People remember all the instances that seem to support the notion and disregard or forget the many more instances that do not; non-events simply do not stick in the memory as well. Or consider the results of a scientific study. If its conclusion supports a belief we already hold dear, we accept it as a good, solid study. If its conclusion contradicts a cherished belief, we look much more carefully for potential flaws and try to find some way to dismiss it; we may even be less likely to recall it in the future.

Let’s try an experiment. Take the numbers 4, 8, 16 and 32. Try to guess the rule that was used to generate this sequence. Now write down a different sequence of numbers that you could use to test your theory, and then a second one. Chances are you have just written two sets of doubling numbers. If so, you have demonstrated congruence bias: the tendency to test our own hypothesis directly without also testing alternative hypotheses. Congruence bias is one of the routes to confirmation bias. The actual rule was simply that each number be greater than the one before it. Every doubling sequence also obeys that rule, so tests like yours would keep coming back ‘yes’ without ever revealing that the guess was wrong, whereas a sequence such as 1, 2, 3, 4, which breaks the doubling rule but still increases, would have exposed the error at once. To test a theory properly we have to try to disconfirm it. The mistake was to form a hypothesis and then repeatedly look for cases that confirm it, rather than testing it against competing possibilities. Left unchecked, this tendency can lead people to hold firm conclusions that may have no statistical basis in reality.
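The logic of the number experiment can be sketched in a few lines of Python. The function names below are hypothetical, chosen only for illustration; the point of the sketch is that a test sequence tells you something only when your guessed rule and the real rule give different answers about it.

```python
# A minimal sketch of the 4, 8, 16, 32 experiment.
# The function names are hypothetical, made up for this illustration.

def is_doubling(seq):
    """The guessed hypothesis: each number is twice the one before it."""
    return all(b == 2 * a for a, b in zip(seq, seq[1:]))

def is_increasing(seq):
    """The actual rule: each number is greater than the one before it."""
    return all(b > a for a, b in zip(seq, seq[1:]))

def is_informative(seq):
    """A test can only reveal a wrong guess if the two rules disagree about it."""
    return is_doubling(seq) != is_increasing(seq)

# Tests a person with congruence bias tends to write: more doubling sequences.
confirming_tests = [[3, 6, 12, 24], [5, 10, 20, 40]]
# Tests that deliberately break the guessed rule while still increasing.
disconfirming_tests = [[1, 2, 3, 4], [2, 7, 50, 51]]

for seq in confirming_tests + disconfirming_tests:
    print(seq,
          "doubling:", is_doubling(seq),
          "increasing:", is_increasing(seq),
          "informative:", is_informative(seq))
```

Both doubling sequences satisfy the guessed rule and the real rule alike, so they are uninformative; only the sequences that break the doubling pattern can show that the guess was too narrow.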

The tendency towards confirmation bias is an inescapable part of being human. It is so deeply ingrained that only once the methods of science were fully developed did we have a tool to help counter it. To guard against it in our own lives, we should take a hint from Darwin and deliberately seek out, and make a note of, whatever appears to contradict our current beliefs.

DJP